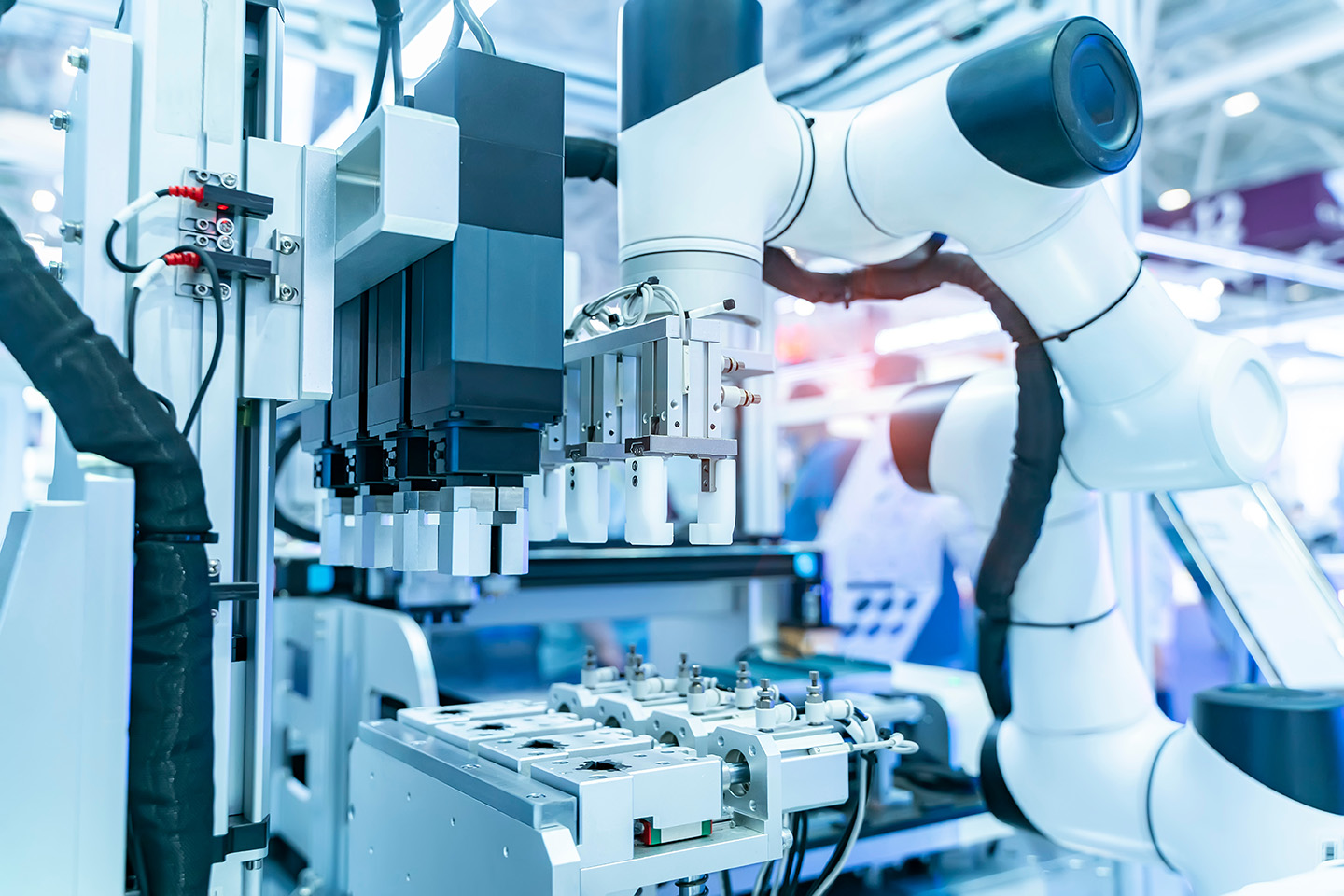

As leading talent consultants focused on AI and data roles in Dutch healthcare, pharma, and medtech, we've noticed a shift in hiring conversations: while everyone is talking about models, performance, and pipelines, decision-makers are already worrying about something else.

That concern is the EU AI Act, whose requirements for high-risk systems, including many used in healthcare, begin to apply in 2026. It's already reshaping how teams hire, and who gets hired and promoted.

What makes this moment different is that the AI Act doesn't just regulate technology; it also directly regulates the people who build and oversee it. As a result, the most valuable professionals in healthcare AI are no longer just strong engineers, but individuals who can keep systems compliant, explainable, and trusted in clinical environments. That shift explains why some data scientists are racing ahead into €120k+ roles while others with equally strong technical skills remain stuck.