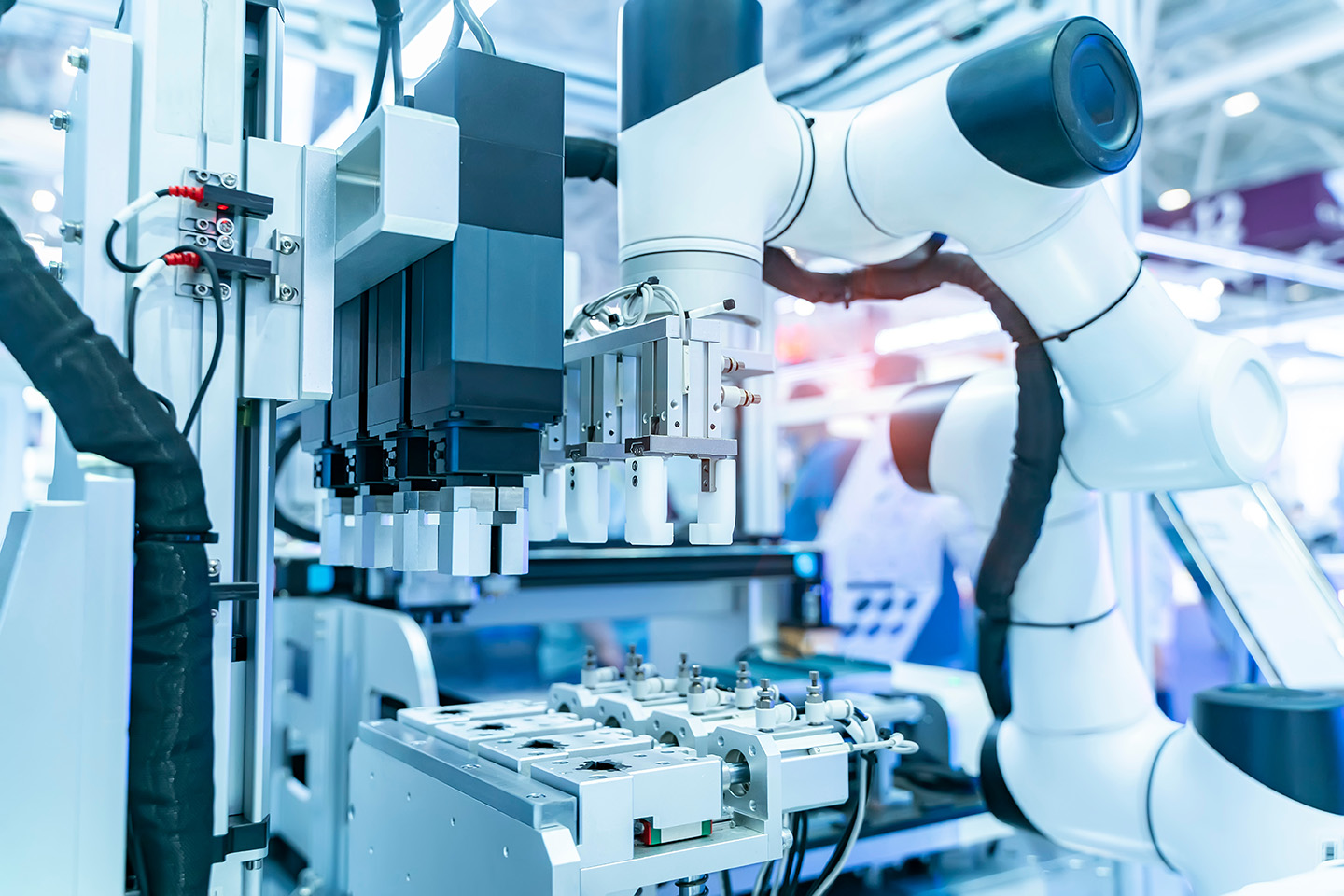

Across healthcare and life sciences, the EU AI Act is no longer a future consideration. For many organisations, the regulation itself is understood. What is far less clear is who inside the organisation actually owns compliance in practice.

2026 is the year when high-risk AI obligations stop being theoretical and start shaping delivery, timelines, and accountability. For medtech, pharma, and digital health leaders, the challenge is not interpreting the law; it is translating it into team design, decision rights, and hiring priorities before gaps surface at the worst possible moment.

The AI Act will not fail organisations on intent; it will fail them on capability.